The choice of sensing technology is critical to the safe and reliable operation of autonomous vehicles. LiDAR (Light Detection and Ranging) and cameras are two of the primary technologies self-driving cars use to perceive their environment. Each has strengths and limitations, and understanding the differences is essential when designing an autonomous driving system. This article compares LiDAR and camera-based systems, highlighting their respective advantages and challenges.

LiDAR: Precision and Depth

LiDAR is renowned for its ability to provide high-resolution 3D mapping of the environment. By emitting laser pulses and measuring the time it takes for the pulses to return after reflecting off objects, LiDAR creates a detailed 3D representation of the surroundings. This capability offers several key advantages:

Accurate Distance Measurement: LiDAR provides precise distance measurements, which are crucial for detecting and avoiding obstacles. The ability to measure distances accurately helps autonomous vehicles maintain safe following distances and avoid collisions with objects in their path.

Depth Perception: Unlike cameras, which must infer depth from images, LiDAR measures depth directly. This lets the system separate objects by their distance from the vehicle, improving the accuracy of object detection and classification.

Performance in Low Light and Adverse Conditions: Because LiDAR supplies its own illumination, it works at night and in other low-light conditions where cameras may struggle. Fog, heavy rain, and snow can still scatter the laser pulses and shorten the usable range, so its performance in bad weather depends on the sensor and on how severe the conditions are.
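The time-of-flight principle described above reduces to one line of arithmetic: the pulse travels to the object and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below is a minimal illustration of that relationship only. The function name and the 200 ns example value are assumptions made for this example, and a real sensor also has to handle noise, multiple returns, and timing calibration.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_time_of_flight(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


if __name__ == "__main__":
    # Illustrative value: a return arriving 200 nanoseconds after emission.
    print(f"{range_from_time_of_flight(200e-9):.2f} m")  # prints "29.98 m"
```

The example also shows why timing precision matters: one nanosecond of round-trip timing error corresponds to roughly 15 cm of range error.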

However, LiDAR also faces some challenges:

Cost: LiDAR systems have historically been expensive, though costs have been decreasing with technological advancements. High prices can be a barrier to widespread adoption, especially in mass-market vehicles.

Complexity and Integration: LiDAR systems can be complex to integrate with other sensors and vehicle systems. Effective sensor fusion is required to combine LiDAR data with information from cameras and radar, which can add to the overall system complexity.

Camera-Based Systems: Visual Understanding

Camera-based systems use visual information captured by cameras to interpret the environment. These systems rely on image processing algorithms to detect and recognize objects, road signs, and lane markings. The advantages of camera-based systems include:

Rich Visual Detail: Cameras capture rich visual information, including color and texture, which is useful for identifying and interpreting road signs, traffic lights, and lane markings. This visual detail is important for understanding the context of the environment.

Lower Cost: Cameras are generally less expensive than LiDAR systems, making them a cost-effective option for many autonomous vehicle applications. This lower cost can contribute to more affordable autonomous driving solutions.

Wide Availability: Cameras are widely available and can be easily integrated into vehicle designs. They are already used in various advanced driver-assistance systems (ADAS), such as lane-keeping assist and automatic emergency braking.

However, camera-based systems also have limitations:

Limited Depth Perception: Cameras provide visual information but lack direct depth measurement. Depth must be inferred from image data, for example from the disparity between two cameras or from learned monocular cues, and the estimate becomes less reliable in cluttered scenes and as objects get farther away.

Sensitivity to Lighting Conditions: Camera performance can be affected by lighting conditions, such as glare, shadows, or low light. This sensitivity can impact the system’s ability to accurately detect and recognize objects in various environments.

Data Processing Requirements: Processing camera data requires sophisticated algorithms and significant computational power, and doing so in real time adds to the overall system’s complexity.
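To make the depth limitation concrete, one common way to infer depth from images is stereo vision: two cameras a known distance apart see the same point at slightly different horizontal positions, and depth follows from that pixel offset (the disparity) as Z = f * B / d. The sketch below assumes an idealized, rectified stereo pair. The focal length, baseline, and disparity values are hypothetical numbers chosen for illustration, not figures from any particular vehicle.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 1000 px focal length, cameras 0.5 m apart.
    f_px, baseline = 1000.0, 0.5
    for disparity in (50.0, 49.0, 5.0, 4.0):
        depth = depth_from_disparity(f_px, baseline, disparity)
        print(f"disparity {disparity:4.0f} px -> depth {depth:6.1f} m")
```

With these assumed numbers, a one-pixel matching error moves a 10 m estimate by about 0.2 m but moves a 100 m estimate by 25 m, which is why inferred depth degrades with distance.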

Combining LiDAR and Cameras: A Synergistic Approach

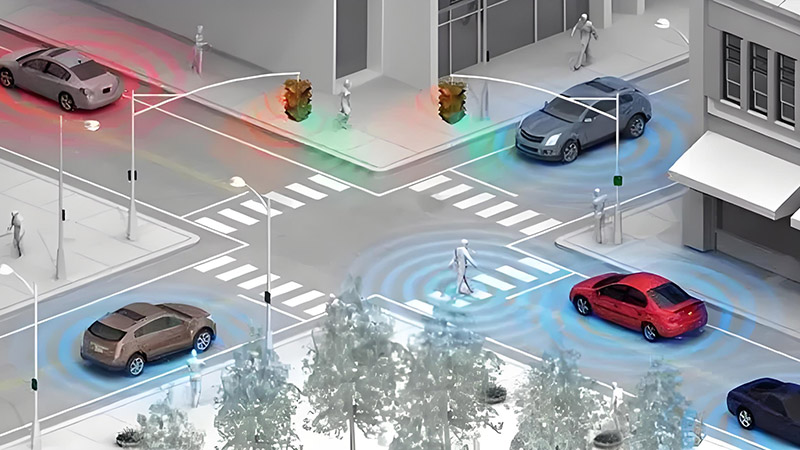

Many autonomous vehicle systems use a combination of LiDAR and camera-based technologies to leverage the strengths of each. This approach, known as sensor fusion, integrates data from multiple sources to create a more comprehensive and accurate perception of the environment.

Enhanced Object Detection: Combining LiDAR’s depth information with a camera’s visual detail allows for more accurate object detection and classification. For example, LiDAR can provide precise distance measurements, while cameras can offer visual context for interpreting the type and appearance of objects.

Improved Reliability: Using multiple sensors helps to mitigate the limitations of individual technologies. For instance, if one sensor is affected by adverse conditions, other sensors can provide complementary data to maintain reliable operation.

Robust Decision-Making: Sensor fusion enhances the decision-making capabilities of autonomous systems by providing a richer and more accurate understanding of the environment. This can improve the vehicle’s ability to navigate complex scenarios and respond to unexpected situations.
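One simple form of the fusion described above is to project LiDAR points into the camera image and attach a distance to each object the camera has detected. The sketch below is a simplified illustration under stated assumptions, not a production pipeline: the points are assumed to be already transformed into the camera frame (x right, y down, z forward), the camera follows an ideal pinhole model with no lens distortion, and the names `CameraIntrinsics`, `Detection`, `project`, and `distance_for_detection` are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]  # (x right, y down, z forward), metres


@dataclass
class CameraIntrinsics:
    fx: float  # horizontal focal length, pixels
    fy: float  # vertical focal length, pixels
    cx: float  # principal point x, pixels
    cy: float  # principal point y, pixels


@dataclass
class Detection:
    label: str     # class name produced by the camera pipeline
    x_min: float   # bounding box in pixel coordinates
    y_min: float
    x_max: float
    y_max: float


def project(point: Point3D, cam: CameraIntrinsics) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame point; None if behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (cam.fx * x / z + cam.cx, cam.fy * y / z + cam.cy)


def distance_for_detection(det: Detection,
                           points: List[Point3D],
                           cam: CameraIntrinsics) -> Optional[float]:
    """Median forward distance of the LiDAR points that fall inside the box."""
    depths = []
    for p in points:
        pixel = project(p, cam)
        if pixel is None:
            continue
        u, v = pixel
        if det.x_min <= u <= det.x_max and det.y_min <= v <= det.y_max:
            depths.append(p[2])
    # The median resists stray points from the background or foreground.
    return median(depths) if depths else None


if __name__ == "__main__":
    cam = CameraIntrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
    car = Detection("car", x_min=600.0, y_min=330.0, x_max=680.0, y_max=390.0)
    cloud = [
        (0.0, 0.0, 20.0), (0.2, 0.1, 20.4), (-0.2, -0.1, 19.8),  # on the car
        (5.0, 0.0, 12.0),   # projects outside the box
        (0.0, 0.0, -3.0),   # behind the camera
    ]
    print(car.label, distance_for_detection(car, cloud, cam))  # prints "car 20.0"
```

Here the camera supplies the label and the LiDAR supplies the distance, which is the division of labour the text describes. Real systems add extrinsic calibration, time synchronization, and distortion correction before this step.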

Conclusion

LiDAR and camera-based systems each offer distinct advantages and challenges for autonomous vehicles. LiDAR provides precise depth measurement and works independently of ambient light, while cameras offer rich visual detail at lower cost. Combining both through sensor fusion is a promising way to offset the weaknesses of each and improve performance and safety in autonomous driving. As the technology continues to advance, the integration of LiDAR and camera-based systems will play a key role in the development of reliable and effective autonomous vehicles.